In this article, we are going to learn about the architecture of Kubernetes, one of the most widely used container orchestration platforms.

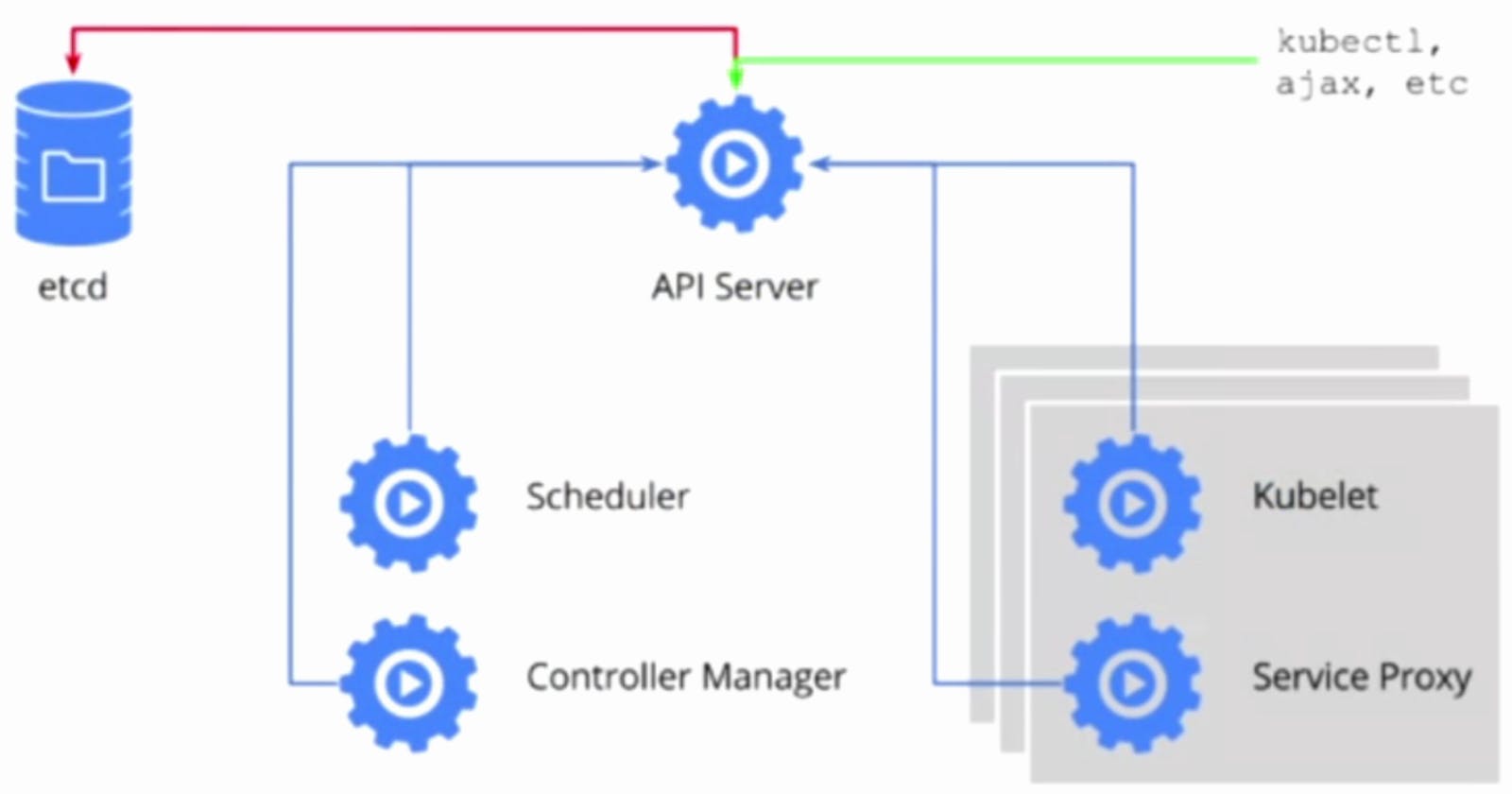

As we can see in the architecture diagram, there is a control plane and a data plane. In k8s terms, these run on the master node(s) and the worker nodes respectively.

To interact with our Kubernetes cluster, we use the kubectl command-line utility.

Master node:

One or more master nodes form the control plane in Kubernetes. It consists of components such as kube-apiserver, kube-controller-manager, kube-scheduler, and the etcd datastore. We will look at each of these components below:

kube-apiserver:

kube-apiserver is the entry point of our k8s cluster. When we deploy or query Kubernetes objects using kubectl, we connect to kube-apiserver, which processes the request and responds to it. By default, kube-apiserver listens on port 6443. If the Kubernetes cluster is bootstrapped using the kubeadm tool, kube-apiserver is deployed as a static pod in the kube-system namespace.
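To make the "entry point" idea concrete, here is a minimal sketch of the REST URL that a query like "kubectl get pods" resolves to. The path layout (/api/v1/namespaces/<namespace>/pods) is the standard core API path; the host name and the helper function are hypothetical and only serve as an illustration.

```python
from urllib.parse import urlunsplit


def pods_list_url(host: str, namespace: str = "default", port: int = 6443) -> str:
    """Build the URL of the core-API endpoint that lists pods in a namespace.

    `host` is a placeholder for the API server address of your cluster.
    """
    path = f"/api/v1/namespaces/{namespace}/pods"
    return urlunsplit(("https", f"{host}:{port}", path, "", ""))


# Hypothetical API server address, used only for illustration.
print(pods_list_url("cluster.example.internal"))
print(pods_list_url("cluster.example.internal", namespace="kube-system"))
```

A real request also needs credentials (a client certificate or bearer token), which kubectl reads from the kubeconfig file.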

kube-controller-manager:

kube-controller-manager can be defined as the hub that bundles the various Kubernetes controllers into one process. A Kubernetes controller is a control loop that watches particular Kubernetes objects and acts to move the current state of the system towards the desired state. One example is the ReplicaSet controller, which monitors replica sets and, whenever a pod belonging to one of them fails or is deleted, creates a replacement so that the pods are auto-healed. kube-controller-manager listens on port 10257. If the Kubernetes cluster is bootstrapped using the kubeadm tool, kube-controller-manager is deployed as a static pod in the kube-system namespace. We can enable or disable individual controllers within kube-controller-manager with the '--controllers' option in its service file or pod manifest.
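The control-loop idea can be sketched in a few lines. This is a simplified in-memory model, not the real controller code: the ReplicaSet class, the pod naming, and the reconcile function are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ReplicaSet:
    """Toy stand-in for a replica set: a desired count plus the pods that exist."""
    name: str
    desired_replicas: int
    pods: list[str] = field(default_factory=list)


_pod_ids = count(1)  # source of unique suffixes for pod names


def reconcile(rs: ReplicaSet) -> None:
    """One pass of the loop: compare actual state with desired state and fix the gap."""
    while len(rs.pods) < rs.desired_replicas:
        rs.pods.append(f"{rs.name}-{next(_pod_ids)}")  # create a missing pod
    while len(rs.pods) > rs.desired_replicas:
        rs.pods.pop()  # remove a surplus pod


rs = ReplicaSet(name="web", desired_replicas=3)
reconcile(rs)               # initial rollout creates three pods
rs.pods.remove(rs.pods[0])  # simulate one pod crashing
reconcile(rs)               # the loop heals the set back to three pods
print(rs.pods)
```

The real controller does not poll in a tight loop; it reacts to change notifications from kube-apiserver, but the compare-and-correct step is the same idea.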

cloud-controller-manager:

cloud-controller-manager lets you integrate a Kubernetes cluster with the cloud provider's API, while separating the components that interact with the cloud provider from those that only interact with your cluster.

cloud-controller-manager runs only the controllers that are specific to your cloud provider. This component is not present in an on-premise cluster or in a cluster installed on your own system for learning purposes.

Like kube-controller-manager, cloud-controller-manager combines several logically independent control loops into a single binary that runs as a single process.

kube-scheduler:

Whenever we deploy a new pod to Kubernetes, the request goes to kube-apiserver, and it is then kube-scheduler's responsibility to make sure that the pod ends up on the right node. While selecting a node for a pod, kube-scheduler takes into consideration various aspects such as the pod's resource requirements, node affinity, taints and tolerations, etc. kube-scheduler listens on port 10259. If the Kubernetes cluster is bootstrapped using the kubeadm tool, kube-scheduler is deployed as a static pod in the kube-system namespace. If we want to use a custom scheduler instead of the default one, we can do that using the "schedulerName" attribute in the spec section of the pod manifest.
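Node selection can be pictured as a filter step followed by a scoring step. The sketch below is a deliberately small model under stated assumptions: the Node and Pod classes are hypothetical, taints are reduced to plain strings, and the scoring rule (prefer the node with the most CPU left) is just one possible choice, not the default scheduler's actual scoring.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int
    taints: set[str] = field(default_factory=set)


@dataclass
class Pod:
    name: str
    cpu_millis: int
    memory_mib: int
    tolerations: set[str] = field(default_factory=set)


def feasible(pod: Pod, node: Node) -> bool:
    """Filter step: the pod must fit and must tolerate every taint on the node."""
    fits = (pod.cpu_millis <= node.free_cpu_millis
            and pod.memory_mib <= node.free_memory_mib)
    tolerated = node.taints <= pod.tolerations  # subset check
    return fits and tolerated


def pick_node(pod: Pod, nodes: list[Node]) -> Optional[str]:
    """Score step: among feasible nodes, pick the one with the most CPU left over."""
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None  # the pod would stay Pending
    best = max(candidates, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=4000, free_memory_mib=8192, taints={"gpu"}),
    Node("node-c", free_cpu_millis=2000, free_memory_mib=4096),
]
print(pick_node(Pod("api", cpu_millis=1000, memory_mib=512), nodes))
print(pick_node(Pod("trainer", cpu_millis=1000, memory_mib=512, tolerations={"gpu"}), nodes))
```

In this model the first pod lands on node-c because node-a is too small and node-b is tainted, while the second pod tolerates the taint and gets the roomier node-b.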

etcd:

etcd is the Kubernetes key-value datastore. It contains information about all the cluster resources and objects. Whenever we make a query with kubectl, such as "kubectl get pods", the request goes to kube-apiserver, which then queries etcd for the pods in the default namespace and returns the result to us. Any change made in our K8s environment is stored in the etcd datastore. To interact with etcd directly, the etcdctl command-line utility is used. If the Kubernetes cluster is bootstrapped using the kubeadm tool, etcd is deployed as a static pod in the kube-system namespace. etcd listens for client requests on port 2379. In a multi-master cluster, etcd is deployed on all masters, and the members communicate with each other as peers on port 2380.
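The key-value nature of the store is easier to see with an example. Kubernetes keeps its objects under keys of the form /registry/<resource>/<namespace>/<name>, so listing the pods of a namespace is a prefix read. The sketch below models this with a plain dictionary; the stored values and helper names are assumptions for illustration (real values are serialized objects, not small dicts).

```python
# Toy stand-in for etcd: keys follow the /registry/<resource>/<namespace>/<name> layout.
store = {
    "/registry/pods/default/web-1": {"phase": "Running"},
    "/registry/pods/default/web-2": {"phase": "Pending"},
    "/registry/pods/kube-system/etcd-master": {"phase": "Running"},
    "/registry/deployments/default/web": {"replicas": 2},
}


def pods_prefix(namespace: str) -> str:
    """Key prefix under which the pods of one namespace are stored."""
    return f"/registry/pods/{namespace}/"


def list_pods(namespace: str = "default") -> list[str]:
    """Prefix read: return the names of all pods stored for the namespace."""
    prefix = pods_prefix(namespace)
    return sorted(key[len(prefix):] for key in store if key.startswith(prefix))


print(list_pods())               # pods in the default namespace
print(list_pods("kube-system"))  # pods in kube-system
```

In a real cluster only kube-apiserver talks to etcd; kubectl and the other components always go through the API server.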

Worker nodes:

Worker nodes form the data plane of our Kubernetes platform. Each of them runs components such as kubelet, kube-proxy, and a container runtime.

Kubelet:

Kubelet is an important K8s data plane component that runs on the worker nodes. It is responsible for registering the worker node in the K8s cluster. Whenever kubelet receives a pod assignment from kube-apiserver, it instructs the underlying container runtime to pull the required container image and run the container inside the pod. Kubelet then monitors this pod and reports the pod status to kube-apiserver. Kubelet listens on port 10250. Unlike the control plane components, kubelet is never deployed as a pod: we always have to install it on the node ourselves from the kubelet binary or package.

Kube-proxy:

Whenever a pod is deployed, it receives an IP address from a network plugin that complies with the CNI standard. But since pods are ephemeral, a pod that is stopped and recreated receives a new IP address. Because of this behavior, the best practice is to use a service with a stable IP address that has the pods as its endpoints. The service IP address is virtual, however: it is not assigned to any network interface. Instead, kube-proxy creates iptables rules on each host, which enable pods to send traffic to the service and have it translated to an endpoint pod's IP address. The service IP address pool can be set through the kube-apiserver '--service-cluster-ip-range' option. Kube-proxy serves its health check on port 10256. Kube-proxy is deployed as a DaemonSet, as we need one instance of it on every node.
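The translation from a service address to a pod address can be sketched as a lookup table plus a random pick. This is only a model of the behavior, not how iptables works internally; the IP addresses, the table, and the translate function are hypothetical.

```python
import random

# Virtual service address -> addresses of the pods currently backing it.
# All addresses are made up for illustration.
service_endpoints = {
    ("10.96.0.10", 80): [("10.244.1.5", 8080), ("10.244.2.7", 8080)],
}


def translate(dest_ip: str, dest_port: int, rng=random) -> tuple[str, int]:
    """Rewrite a service destination to one of its endpoint pods.

    Traffic that is not addressed to a known service passes through unchanged.
    """
    backends = service_endpoints.get((dest_ip, dest_port))
    if not backends:
        return (dest_ip, dest_port)
    return rng.choice(backends)  # pick one backend at random


print(translate("10.96.0.10", 80))   # one of the two pod addresses
print(translate("10.244.1.5", 8080))  # direct pod traffic is left as-is
```

When a pod is recreated with a new IP, only the endpoint list changes; clients keep using the same service address.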

Container runtime:

To run a pod, kubelet passes it to the container runtime, which is responsible for running the containers inside the pod. Traditionally, Kubernetes had built-in support for Docker as a container runtime through dockershim. Over time, this support was deprecated and then removed (in Kubernetes 1.24) to reduce security vulnerabilities and improve resource utilization by dropping unnecessary Docker components from Kubernetes. Now we can use any container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O, and images built with Docker still run on them because they follow the OCI image format. Irrespective of which container runtime we use with Kubernetes, we do not interact with it directly, as that is the job of kubelet.
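The division of labor between kubelet and the runtime can be sketched as an interface with a pluggable implementation. This is a simplified model: the real CRI is a gRPC API with calls such as PullImage, CreateContainer, and StartContainer, while the two-method interface, the FakeRuntime class, and the image name below are assumptions made for illustration.

```python
from typing import Protocol


class ContainerRuntime(Protocol):
    """The small surface the kubelet-like code depends on (stand-in for the CRI)."""

    def pull_image(self, image: str) -> None: ...
    def start_container(self, pod: str, image: str) -> str: ...


class FakeRuntime:
    """In-memory runtime; containerd or CRI-O would sit behind the same interface."""

    def __init__(self) -> None:
        self.images: set[str] = set()
        self.running: dict[str, str] = {}  # container id -> image

    def pull_image(self, image: str) -> None:
        self.images.add(image)

    def start_container(self, pod: str, image: str) -> str:
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        container_id = f"{pod}-c{len(self.running)}"
        self.running[container_id] = image
        return container_id


def run_pod(runtime: ContainerRuntime, pod_name: str, images: list[str]) -> dict:
    """Kubelet-like flow: pull each image, start its container, report a status."""
    container_ids = []
    for image in images:
        runtime.pull_image(image)
        container_ids.append(runtime.start_container(pod_name, image))
    return {"pod": pod_name, "phase": "Running", "containers": container_ids}


runtime = FakeRuntime()
status = run_pod(runtime, "web", ["registry.example/web:1.0"])  # hypothetical image
print(status)
```

Because run_pod only depends on the interface, swapping the runtime does not change the kubelet-side logic, which is the point of having the CRI.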